Anticipation 2017

I gave the following talk on 10.11.2017 at the Anticipation 2017 conference.

I am a PhD candidate at the Royal College of Art on a scholarship from Microsoft Research Cambridge. My research is a practice-based critical investigation of algorithmic prediction. I come from a critical and speculative design background and aim to contribute to the broader conversation about the role of data and algorithms in society and culture, a field also known as critical algorithm studies.

I focus my research on the visual and spatial aspects of prediction as an entry point to bigger social, cultural and political questions. On the surface this starts with data visualisation but it goes all the way down to the multi-dimensional vector spaces on which the predictive operations of machine learning are performed.

I’m going to talk about one of the projects I’m currently working on, called The Monistic Almanac. Adrienne LaFrance has suggested that the Farmer’s Almanac was a precursor to the information age. I am extending this parallel to data science and data visualisation, and applying it in practice by making my own contemporary version of an almanac.

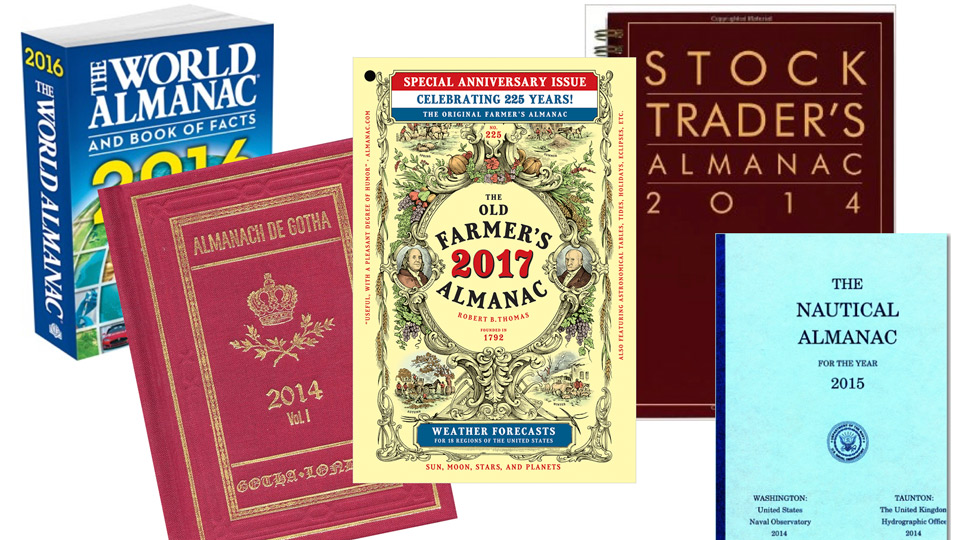

Almanacs are practical guides to the future in areas such as nautical navigation, farming, finance, and many more. Published for the year ahead, they provide reference points to navigate an uncertain world, and bring a sense of cosmic order to everyday life. They are artefacts from the long history of data-centric predictions. From a design perspective, looking at almanacs helps to start unpacking some of what Barnes and Wilson [1] call “big data’s historical burden”.

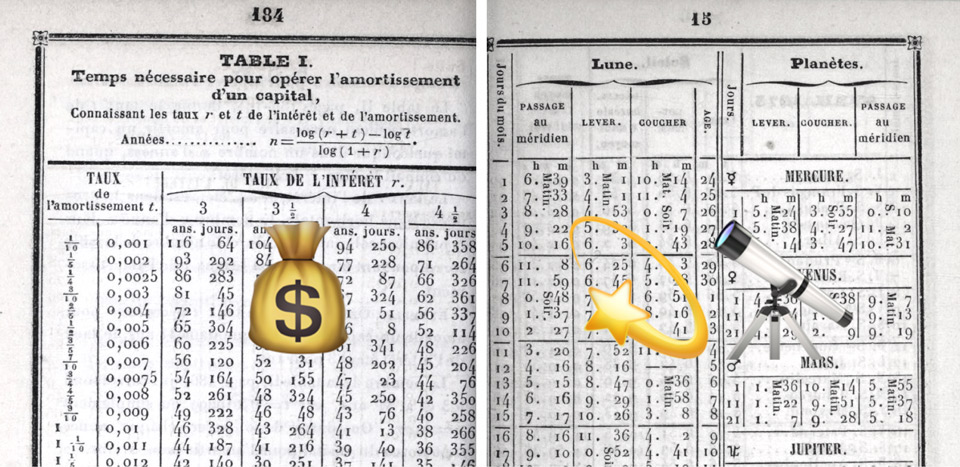

Annuaire pour l'an 1875, publié par le Bureau des longitudes [2] (emoji added)

The notion of monism is a key aspect of this burden: the idea that the same set of laws governs both the natural and social worlds. One example is the way in which the mathematics of astronomy was borrowed in the 19th century and applied to social domains, to predict behaviours like marriage, suicide, or crime, and to legitimise practices like financial speculation [3]. This is reflected in almanacs, where astronomical tables are printed next to interest on loans, all sorts of unit conversions, and so on. Today monism is alive and well: the same computational statistics are applied to virtually every domain across science, business, and society.

My project is about pushing monism to its absurd extreme. The almanac is an interesting site to do this because unlike the grand promises of big data, it doesn’t take itself too seriously. It aims to be "useful with a pleasant degree of humour", with different rationalities coexisting happily, sometimes within the same publication: science, astrology, divination, folk knowledge, remedies, proverbs. Some, like the Old Moore’s Almanac, make predictions with the tone of a tabloid/gossip newspaper.

In practice I am using the tools of data science, machine learning and data visualisation (such as Jupyter Notebook, scikit-learn, and D3.js) to construct a series of predictive rationalities: basically a set of machine-learning astrologies.

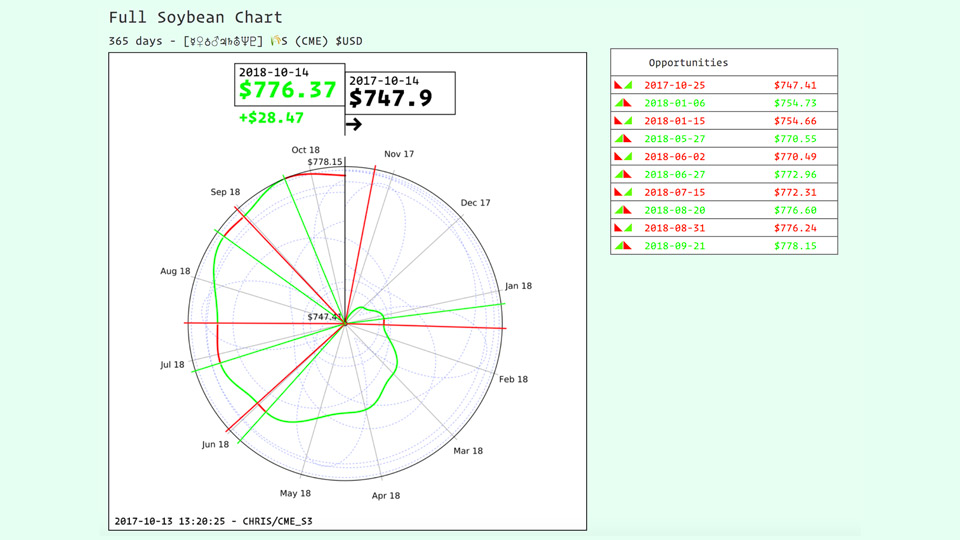

The first one is called Cosmic Commodity Charts. It uses the positions of the planets of the solar system to predict prices on the commodity markets. A support vector machine, used here essentially as a regression, ‘learns’ the relationship between planet positions and prices from 30 years of historical data. Given future planet positions [4], it can then produce price predictions.
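To make that pipeline concrete, here is a minimal sketch of a support vector regression in scikit-learn. It is not the project's actual code: the data is random stand-in data, and the choice of ecliptic longitudes as features, the sine/cosine encoding, and the `C` and `epsilon` values are all assumptions made for illustration.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

rng = np.random.default_rng(0)

# Hypothetical stand-in data: one row per trading day, one column per planet,
# each value a longitude in degrees. These are random numbers, not real
# ephemeris positions or market prices.
n_days, n_planets = 500, 8
longitudes = rng.uniform(0.0, 360.0, size=(n_days, n_planets))
prices = 100.0 + rng.normal(0.0, 5.0, size=n_days)


def encode(longitudes_deg):
    """Map each angle to (sin, cos) so that 359 and 1 degrees end up close."""
    radians = np.deg2rad(longitudes_deg)
    return np.hstack([np.sin(radians), np.cos(radians)])


# Assumed hyperparameters; an RBF-kernel SVR fitted on scaled features.
model = make_pipeline(StandardScaler(), SVR(kernel="rbf", C=10.0, epsilon=0.1))
model.fit(encode(longitudes), prices)

# "Future" positions are random here as well; in the project they would come
# from an ephemeris.
future_longitudes = rng.uniform(0.0, 360.0, size=(30, n_planets))
predicted_prices = model.predict(encode(future_longitudes))
```

The model will happily return a price for any planetary configuration, whether or not there is anything to learn, which is rather the point.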

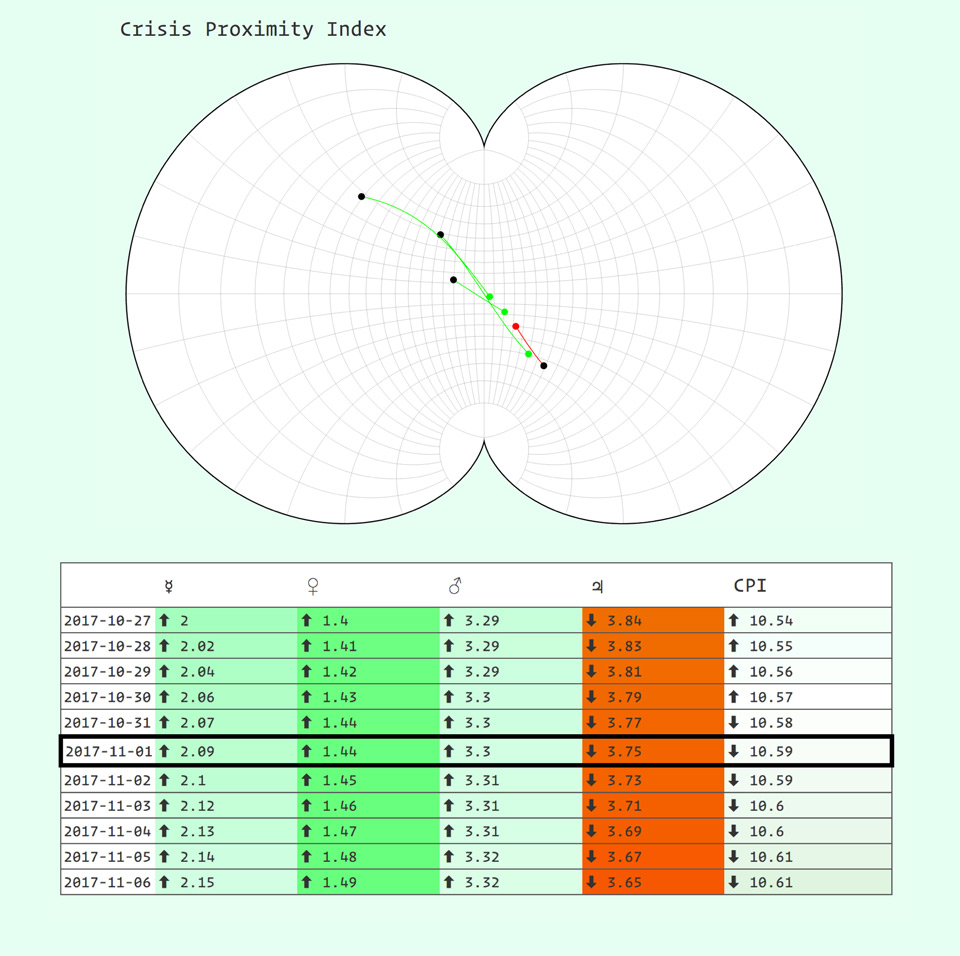

The second one is the Crisis Proximity Index, an astrology based on the financial crisis of 2008. It takes the 9th of August 2007 as its reference point: the day BNP Paribas froze three of its funds, showing the first cracks in trust in the subprime system. Planet positions and the direction of their movements are interpreted on this basis: anything approaching the positions as they were on that date is considered negative, while getting further away is read as a positive sign.

crisis_date = date(2007, 8, 9)
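The reading rule described above could be sketched roughly as follows. This is a toy version under loud assumptions: `toy_longitudes` is a hypothetical stand-in for a real ephemeris (uniform circular motion from an arbitrary epoch, using approximate orbital periods), and the per-planet "positive"/"negative" labels are one possible interpretation of the rule, not the project's implementation.

```python
from datetime import date, timedelta

crisis_date = date(2007, 8, 9)

# Approximate orbital periods in days (rounded values).
ORBITAL_PERIOD_DAYS = {
    "Mercury": 87.97, "Venus": 224.70, "Earth": 365.26, "Mars": 686.98,
    "Jupiter": 4332.59, "Saturn": 10759.22, "Uranus": 30688.5, "Neptune": 60182.0,
}


def toy_longitudes(day):
    """Hypothetical ephemeris: every planet moves on a circle at constant
    speed, starting at 0 degrees on an arbitrary epoch (1 January 2000)."""
    elapsed = (day - date(2000, 1, 1)).days
    return {name: (360.0 * elapsed / period) % 360.0
            for name, period in ORBITAL_PERIOD_DAYS.items()}


def angular_separation(a, b):
    """Smallest angle between two longitudes, in degrees (0 to 180)."""
    diff = abs(a - b) % 360.0
    return min(diff, 360.0 - diff)


def crisis_distances(day):
    """Per-planet angular distance from the position held on crisis_date."""
    now, then = toy_longitudes(day), toy_longitudes(crisis_date)
    return {name: angular_separation(now[name], then[name]) for name in now}


def readings(day):
    """Approaching the crisis position reads as negative, receding as positive."""
    today = crisis_distances(day)
    yesterday = crisis_distances(day - timedelta(days=1))
    result = {}
    for name in today:
        if today[name] < yesterday[name]:
            result[name] = "negative"
        elif today[name] > yesterday[name]:
            result[name] = "positive"
        else:
            result[name] = "neutral"
    return result
```

Calling `readings(date(2017, 11, 10))` returns one label per planet for the day of the talk; with a real ephemeris the labels would differ, but the logic of comparing today's distance with yesterday's stays the same.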

I’ll be expanding on the series in the coming weeks and months, and will eventually publish it as an automated website. Sign up for the newsletter at almanac.computer if you want further updates.

This is still in progress as you can see, but some broad themes are already emerging. I’ll run through these briefly to conclude:

Coming back to prediction and my spatial focus, these two modules are performing operations in space, regardless of the planets in actual space. Whether it’s a flattening in the regression of planet distances, or moving closer to or further from a crisis position, these systems are about giving predictive meaning to literal distances. This echoes things like the nearest neighbour algorithm, one of the most obvious examples of the spatial nature of computational predictions.
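For readers unfamiliar with it, the nearest neighbour idea fits in a few lines. The sketch below is a generic illustration rather than part of the almanac: the points, the labels and the function name `nearest_neighbour_predict` are made up, and the prediction is simply a majority vote among the `k` closest training points.

```python
import math
from collections import Counter


def nearest_neighbour_predict(training, query, k=3):
    """Label a query point by majority vote among its k closest neighbours.

    `training` is a list of (point, label) pairs; closeness is plain
    Euclidean distance in whatever space the points live in.
    """
    ranked = sorted(training, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Made-up two-dimensional examples with made-up labels.
training = [
    ((1.0, 1.0), "calm"), ((1.5, 2.0), "calm"), ((2.0, 1.0), "calm"),
    ((8.0, 8.0), "crisis"), ((9.0, 7.5), "crisis"), ((8.5, 9.0), "crisis"),
]

prediction = nearest_neighbour_predict(training, (7.0, 8.0))
```

Nothing in the function knows what the coordinates mean; the prediction follows entirely from which labelled points happen to be nearby.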

I’m interested in failure and absurdity as a mode of critical engagement. Karppi and Crawford [5] write about the ‘Hack Crash’ of 2013, when the Twitter account of the Associated Press was hacked and sent out fake tweets about explosions at the White House, which triggered automated reactions on the stock market in the minutes that followed. These were quickly corrected, but the researchers note that when systems fail, we get unusual glimpses into how they work. What if we constructed absurd systems that only do this part?

Finally, constructing these rudimentary systems myself is a way to look ‘inside’ at their inner workings, but a lot of it remains abstracted. I am relying on software libraries that do most of the mathematical work for me, which is part of my point. As Ananny and Crawford [6] point out, the transparency ideal (seeking to understand algorithms by looking inside) has serious limitations. What is more interesting is to look across, in my case at how belief systems get encoded in computational systems.

[1] Barnes, T. J. and Wilson, M. W. (2014) ‘Big Data, social physics, and spatial analysis: The early years’, Big Data & Society, 1(1), pp. 1–14. doi: 10.1177/2053951714535365.

[2] Bureau des Longitudes (1875) ‘Annuaire pour l'an ... publié par le Bureau des longitudes’. Available at: http://gallica.bnf.fr/ark:/12148/bpt6k65393410.

[3] Ashworth, W. J. (1994) ‘The calculating eye: Baily, Herschel, Babbage and the business of astronomy’, The British Journal for the History of Science, 27(04), pp. 409–441. doi: 10.1017/S0007087400032428.

[4] Future planet positions are provided by the Jet Propulsion Laboratory’s DE430 Ephemeris, accessed through the Skyfield Python library.

[5] Karppi, T. and Crawford, K. (2016) ‘Social Media, Financial Algorithms and the Hack Crash’, Theory, Culture & Society, 33(1), pp. 73–92. doi: 10.1177/0263276415583139.

[6] Ananny, M. and Crawford, K. (2016) ‘Seeing without knowing: Limitations of the transparency ideal and its application to algorithmic accountability’, New Media & Society. doi: 10.1177/1461444816676645.